Artificial Intelligence (AI) Ethics

Artificial Intelligence (AI) is growing fast. It is used in phones, cars, hospitals, banks, and even government offices. AI can save time, reduce errors, and bring new ideas. But AI also brings problems. These problems are not about machines alone; they are about people, values, and society.

When we talk about AI ethics, we mean the rules and principles that guide how AI should be built and used. It is about making sure AI is fair, safe, private, and accountable. It asks questions such as: Is AI treating everyone equally? Is it protecting personal data? Who is responsible if something goes wrong?

This guide explains the ethical challenges of AI in simple language. It covers issues like bias, privacy, job loss, surveillance, and misuse. It also shows how companies, governments, and individuals can act responsibly. If AI is built with ethics in mind, it can help people. If not, it can create harm and increase inequality.

1. The rise of artificial intelligence

AI is now part of daily life. It recommends music, routes traffic, flags fraud, and helps doctors read scans. The tools behind it include machine learning, computer vision, and language models. These tools learn patterns from data and use those patterns to make predictions.

Growth is fast. Companies adopt AI to save time and cut costs. Governments test AI to improve services. This brings benefits but also real risks. When systems scale to millions of people, small mistakes can become big harms.

2. Main ethical problems

2.1 Bias in AI

AI learns from data. If the data has unfair patterns, the system can repeat those patterns. This can harm people when decisions involve jobs, loans, housing, or policing. Bias often comes from past records that reflect old inequalities.

Fixing bias needs work at each step. Teams must choose better data, test results for different groups, and correct gaps they find. Independent review helps. So does listening to communities affected by the decisions.
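
One way to "test results for different groups" is to compare how often each group receives a positive decision. The sketch below is only an illustration: the group names, the sample decisions, and the helper names `selection_rates` and `disparity_ratio` are all assumptions made for this example, not part of any specific fairness toolkit.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates are equal
    return min(rates.values()) / highest

# Hypothetical (group, approved) decisions, invented for illustration.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparity_ratio(rates)
print(rates)
print(f"disparity ratio: {ratio:.2f}")
```

A low ratio does not prove unfair treatment on its own, but it is a signal that the team should look closer. How large a gap is acceptable is a policy decision, not something the code can settle.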

2.2 Privacy and surveillance

AI often needs large amounts of personal data. That data can come from phones, websites, cameras, and social media. People do not always know what is collected or how long it is kept. This creates risk of misuse, leaks, or tracking without clear consent.

Good privacy practice is simple to say and hard to do. Collect less data. Use it for clear reasons. Protect it with strong security. Give people choices and a way to say no. Delete data that is no longer needed.
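
Two of these habits, collecting less and deleting old data, can be sketched in a few lines. The field names, the 90-day retention window, and the sample records below are assumptions chosen for illustration; a real policy would come from legal and product requirements.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: the only fields this feature needs, and how long to keep them.
ALLOWED_FIELDS = {"user_id", "country", "created_at"}
RETENTION = timedelta(days=90)

def minimize(record):
    """Keep only the fields on the allow-list and drop everything else."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}

def drop_expired(records, now):
    """Return only the records that are still inside the retention window."""
    return [r for r in records if now - r["created_at"] <= RETENTION]

# Invented sample data; the email field is collected upstream but not needed here.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = [
    {"user_id": 1, "country": "DE", "email": "a@example.com",
     "created_at": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"user_id": 2, "country": "FR", "email": "b@example.com",
     "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
]

kept = drop_expired([minimize(r) for r in raw], now)
print(kept)
```

An allow-list is used here instead of a block-list so that a newly added field is dropped by default until someone decides it is needed.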

2.3 Accountability and responsibility

When an AI system causes harm, someone should answer for it. Today the line is blurry between the model builder, the company that bought it, and the person who used it. Without clear rules, victims may be left with no remedy.

Accountability needs records and review. Systems should log how decisions were made. Human supervisors should approve high risk uses. Contracts and laws should name who is responsible and how people can appeal.
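
A decision log with a human approval step might look like the sketch below. The `DecisionRecord` fields, the `finalize` helper, and the sample values are hypothetical names made up for this example; they show the idea, not a required format.

```python
import json
from dataclasses import dataclass, asdict
from typing import Optional

@dataclass
class DecisionRecord:
    decision_id: str
    model_version: str
    inputs: dict
    output: str
    risk_level: str                    # "low" or "high"
    approved_by: Optional[str] = None  # human reviewer, needed for high-risk cases

def finalize(record: DecisionRecord) -> str:
    """Return a JSON log line, refusing high-risk decisions that lack a reviewer."""
    if record.risk_level == "high" and not record.approved_by:
        raise PermissionError("high-risk decision needs human approval")
    return json.dumps(asdict(record), sort_keys=True)

# Invented example: a high-risk denial that has not been reviewed yet.
record = DecisionRecord("d-001", "v1.2", {"income": 42000}, "deny", "high")

try:
    finalize(record)
    blocked = False
except PermissionError:
    blocked = True  # the unreviewed decision was stopped

record.approved_by = "reviewer_17"
log_line = finalize(record)
print(blocked)
print(log_line)
```

Storing the model version and inputs next to the output is what lets someone later reconstruct how a decision was made and who signed off on it.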

2.4 Jobs and the economy

AI can replace some tasks and change others. Routine, repeatable work is most at risk. New roles will appear, but they will need new skills. This shift can be stressful for workers and local economies.

Fair transitions matter. Companies can invest in training. Governments can fund learning programs and safety nets. People who lose tasks should get a path to better work, not just a notice of change.

2.5 Transparency and explainability

Many AI systems are hard to understand. People may not know why a score was low or why an application was rejected. This hurts trust and makes it hard to challenge a mistake.

Basic steps help a lot. Give plain-language reasons. Show which data points mattered. Offer ranges, examples, and an appeal path. Publish simple model cards that describe limits and known risks.
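
For a very simple scoring model, "show which data points mattered" can be as direct as listing the factors that pulled the score down the most. The weights, threshold, and feature names below are invented for illustration, and most real systems are not a plain weighted sum, so treat this as a sketch of the idea rather than a general explanation method.

```python
# Hypothetical weights for a simple linear score, invented for illustration.
WEIGHTS = {
    "on_time_payments": 2.0,
    "credit_utilization": -1.5,
    "recent_defaults": -3.0,
}
THRESHOLD = 1.0  # assumed approval cutoff

def score_with_reasons(features, top_n=2):
    """Score an applicant and name the factors that lowered the score most."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    total = sum(contributions.values())
    approved = total >= THRESHOLD
    # Sort negative contributions so the most harmful factor comes first.
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    reasons = [name for _, name in negatives[:top_n]]
    return approved, total, reasons

# Invented applicant with feature values scaled between 0 and 1.
applicant = {"on_time_payments": 0.9, "credit_utilization": 0.8, "recent_defaults": 1.0}
approved, total, reasons = score_with_reasons(applicant)

print("approved:", approved)
print("main factors against:", reasons)
```

The list of factors can then be turned into plain-language reasons for the person affected, together with a way to appeal.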

2.6 Safety and reliability

AI should work as intended and fail safely. Risks include wrong outputs, security attacks, and misuse for harmful goals. Small errors can have large impact when systems scale.

Safety starts before launch and continues after. Teams should stress-test models, simulate attacks, and monitor live behavior. High-risk systems should have a visible “stop” control and a clear incident plan.

2.7 Power and inequality

AI can increase the power of large firms and governments. Those who control data and compute can set terms for others. Communities with fewer resources may have little voice in how systems affect them.

Ethical practice means sharing benefits and limiting harms. Include affected groups in design. Use open standards where possible. Support research and tools that small organizations can use.

2.8 Environment and energy use

Training and running large models can consume a lot of energy and water. Hardware production also has a footprint. These costs are often hidden from the public.

Teams can reduce impact by using efficient models, cleaner data centers, and shared infrastructure. Reporting energy use and efficiency helps people compare options and push for better practice.

3. Unethical uses of AI

Some uses do clear harm. Mass surveillance tracks people without consent. Deepfakes can spread lies and damage reputations. Manipulative ad systems can target vulnerable groups. Autonomous weapons raise life-and-death risks without human judgment.

Guardrails are needed. Ban certain uses. Require strict oversight for others. Test systems for abuse before release. Build detection tools for fakes and misuse.

In today's technological world, you need to understand AI well so that you can use it in the most ethical ways possible.

4. Ethics in key sectors

4.1 Healthcare

AI can read scans, predict risks, and help plan treatments. It should not replace human care for serious decisions. Patients deserve explanations they can understand and a chance to ask questions.

Privacy is critical. Medical data must be protected. Systems should be tested on diverse groups so results are fair for everyone, not just for one population.

4.2 Education

AI tutors and grading tools can help teachers and students. But they can also be unfair if the data is narrow or if the system guesses wrong. Children’s data is especially sensitive and needs extra protection.

Schools should give notice, allow opt-outs where possible, and keep a human in the loop. Teachers must be able to review and correct AI decisions.

4.3 Finance and credit

Banks use AI to score credit and detect fraud. These decisions must be fair and explainable. A person who is denied should receive reasons and a simple way to appeal.

Firms should test models for bias by gender, race, age, and location. Regulators should audit systems and require records of model changes.

4.4 Policing and justice

AI can aid investigations, but errors here can cost freedom. Face systems and predictive tools can be biased and should never be the only basis for arrest, bail, or sentencing.

Use should be narrow, transparent, and subject to warrants and audits. Communities should have a voice in whether and how tools are used.

5. How to build ethical AI

Start with purpose and risk. Write down what the system should and should not do. Map who could be harmed. Name who is responsible for outcomes and reviews.

Use data governance. Collect only what you need. Balance datasets. Remove sensitive features when they are not required. Document sources and permissions.

Test for fairness and safety before launch and on a schedule after. Compare results across groups. Red-team the system against misuse. Monitor in production and fix issues fast. Provide explanations and an appeal path. Keep a human in the loop for high-risk cases.

6. Roles and duties

Tech companies should build safe systems, test for bias, explain decisions, and accept audits. Governments should make clear rules for privacy, safety, and fairness, and enforce them.

Standards bodies can set shared testing methods. Universities and civil groups can study impact and report harms. Individuals can ask for reasons, use privacy controls, and report problems.

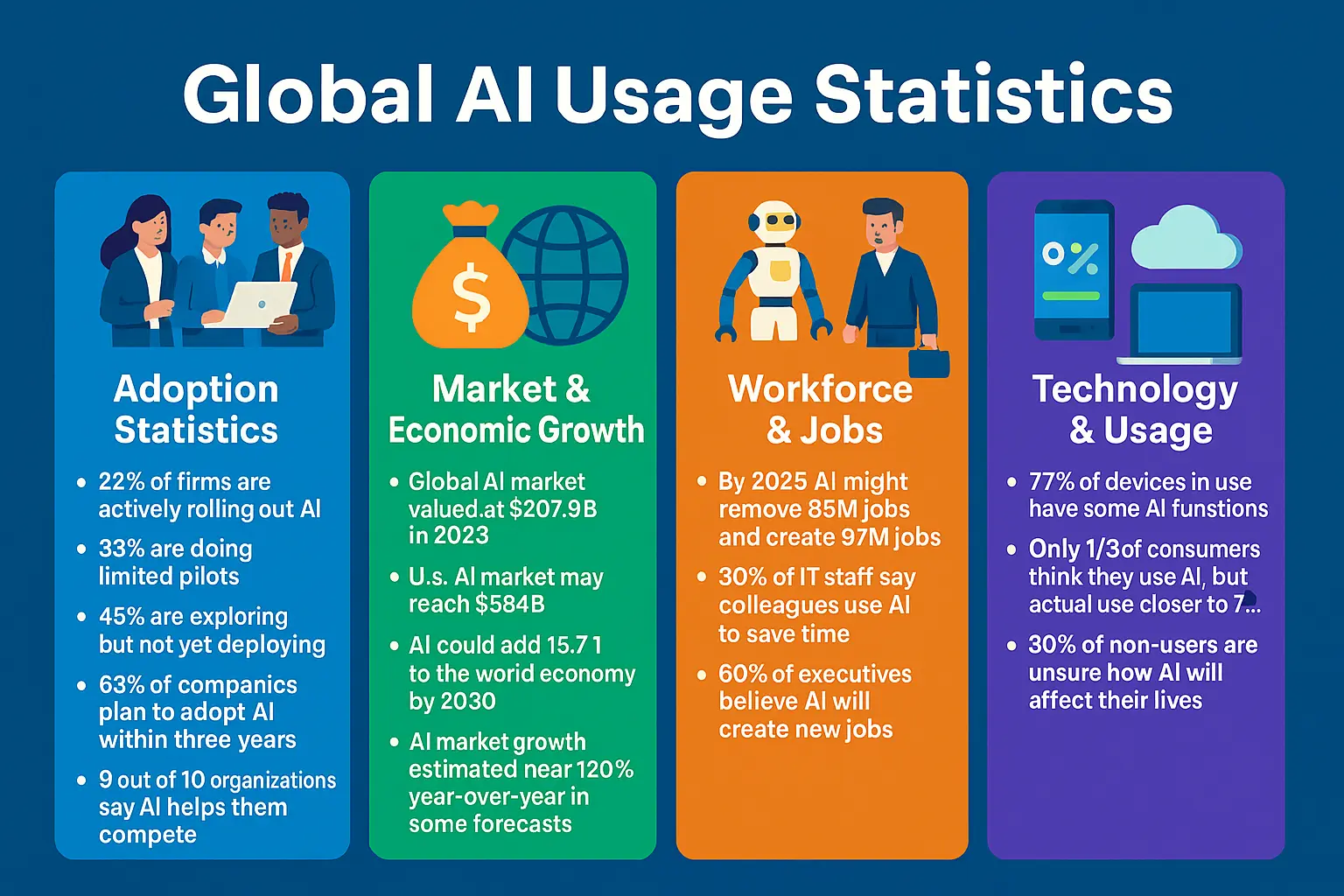

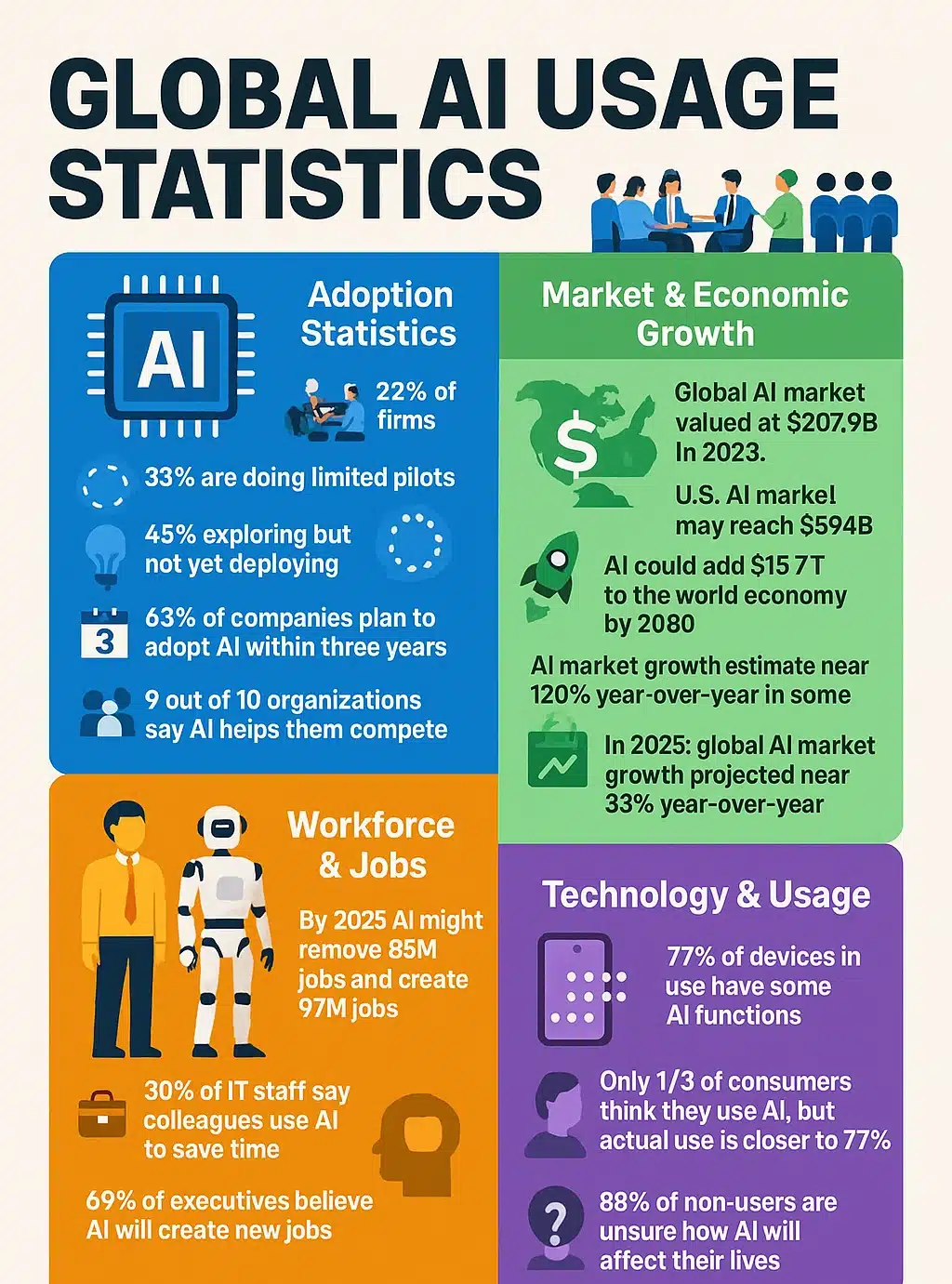

7. Statistics snapshot

These figures show how quickly AI is spreading and why ethics matters.

• 22 percent of firms are actively rolling out AI

• 33 percent are doing limited pilots

• 45 percent are exploring but not yet deploying

• The global AI market was about 207.9 billion dollars in 2023

• The United States AI market may reach about 594 billion dollars by 2032

• AI could add 15.7 trillion dollars to the world economy by 2030

• By 2025 AI might remove 85 million jobs and create 97 million jobs

• 30 percent of IT staff say colleagues use AI to save time

• 69 percent of executives believe AI will create new jobs

• 77 percent of devices in use have some AI functions

• 9 out of 10 organizations say AI helps them compete

• 63 percent of companies plan to adopt AI within three years

• Some estimates show AI market growth near 120 percent year over year

• In 2025, global AI market growth is estimated near 33 percent year over year

• 88 percent of non-users are unsure how AI will affect their lives

• Only one third of consumers think they use AI, but real use is closer to 77 percent

8. Frequently asked questions

What does artificial intelligence ethics mean?

Artificial intelligence ethics means the rules and values that guide how AI should be built and used. It is about making sure AI is fair, safe, and responsible. Ethics makes sure that AI serves people instead of harming them.

For example, if AI is used to decide who gets a loan, it must not be biased against gender, race, or age. If AI is used in healthcare, it must protect patient privacy. Ethics is the foundation that makes these systems trustworthy.

Why is AI ethics important?

AI is already part of daily life. It can influence hiring, shopping, medical decisions, and even what news we read. If AI is not used in the right way, it can create unfair outcomes, invade personal privacy, or lead to harmful mistakes.

Clear ethics help people trust technology. When AI is built with fairness and responsibility, it can support better decisions, protect human rights, and improve society. Without ethics, AI could create inequality and fear instead of progress.

What are the main problems with AI?

The biggest ethical problems with AI include bias in algorithms, privacy risks, loss of jobs, lack of accountability, and harmful uses like surveillance or spreading fake news. These issues do not come from machines alone but from the way humans design and use them.

For example, biased data can make AI systems discriminate in hiring. Weak privacy rules can allow companies to track people without consent. Without accountability, victims of AI mistakes may never find justice. These problems show why ethics is essential.

How do AI ethics affect my daily life?

AI ethics affect many everyday choices. They shape whether a credit score is fair, whether an online job test is unbiased, and whether medical data is kept private. If AI is used unethically, people may be denied opportunities or lose their freedom without even knowing why.

On the other hand, ethical AI can make life easier. It can improve healthcare, make transport safer, and even support better education. The difference comes from how carefully AI is guided by ethical rules.